Disclaimer: I do not build database engines. I build web applications. I run 4-6 different projects every year, so I build a lot of web applications. I see apps with different requirements and different data storage needs. I’ve deployed most of the data stores you’ve heard about, and a few that you probably haven’t.

I’ve picked the wrong one a few times. This is a story about one of those times — why we picked it originally, how we discovered it was wrong, and how we recovered. It all happened on an open source project called Diaspora.

The project

Diaspora is a distributed social network with a long history. Waaaaay back in early 2010, four undergraduates from New York University made a Kickstarter video asking for $10,000 to spend the summer building a distributed alternative to Facebook. They sent it out to friends and family, and hoped for the best.

But they hit a nerve. There had just been another Facebook privacy scandal, and when the dust settled on their Kickstarter, they had raised over $200,000 from 6400 different people for a software project that didn’t yet have a single line of code written.

Diaspora was the first Kickstarter project to vastly overrun its goal. As a result, they got written up in the New York Times – which turned into a bit of a scandal, because the chalkboard in the backdrop of the team photo had a dirty joke written on it, and no one noticed until it was actually printed. In the NEW YORK TIMES. The fallout from that was actually how I first heard about the project.

As a result of their Kickstarter success, the guys left school and came out to San Francisco to start writing code. They ended up in my office. I was working at Pivotal Labs at the time, and one of the guys’ older brothers also worked there, so Pivotal offered them free desk space, internet, and, of course, access to the beer fridge. I worked with official clients during the day, then hung out with them after work and contributed code on weekends.

They ended up staying at Pivotal for more than two years. By the end of that first summer, though, they already had a minimal but working (for some definition) implementation of a distributed social network built in Ruby on Rails and backed by MongoDB.

That’s a lot of buzzwords. Let’s break it down.

“Distributed social network”

If you’ve seen the Social Network, you know everything you need to know about Facebook. It’s a web app, it runs on a single logical server, and it lets you stay in touch with people. Once you log in, Diaspora’s interface looks structurally similar to Facebook’s:

A screenshot of the Diaspora user interface

There’s a feed in the middle showing all your friends’ posts, and some other random stuff along the sides that no one has ever looked at. The main technical difference between Diaspora and Facebook is invisible to end users: it’s the “distributed” part.

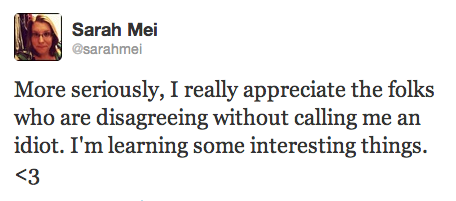

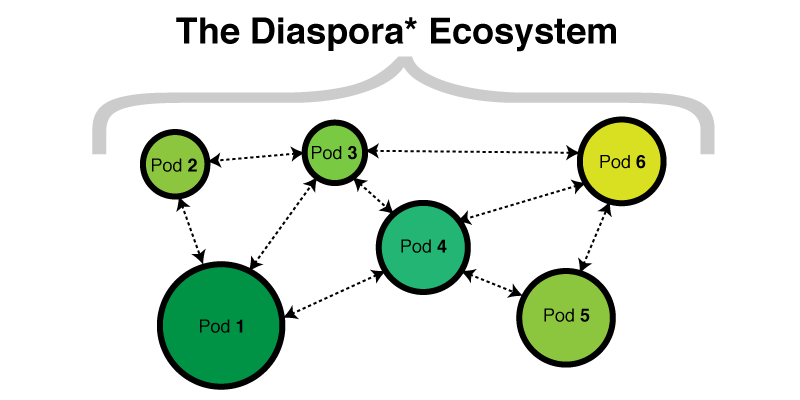

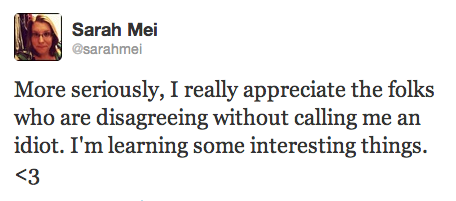

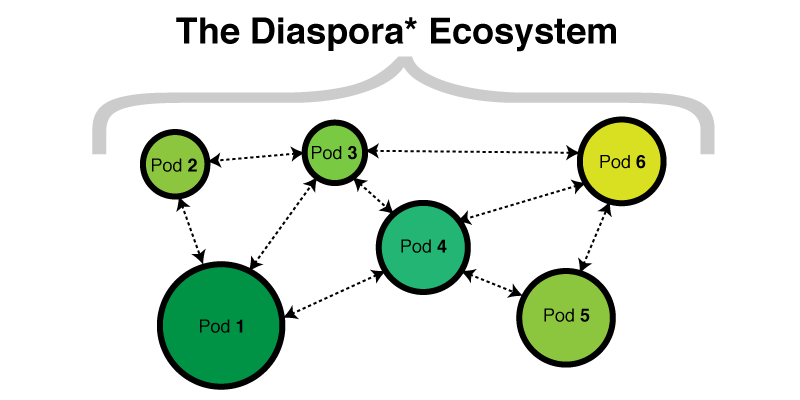

The Diaspora infrastructure is not located behind a single web address. There are hundreds of independent Diaspora servers. The code is open source, so if you want to, you can stand up your own server. Each server, called a pod, has its own database and its own set of users, and will interoperate with all the other Diaspora pods that each have their own database and set of users.

Pods of different sizes communicate with each other, without a central hub.

Each pod communicates with the others through an HTTP-based API. Once you set up an account on a pod, it’ll be pretty boring until you follow some other people. You can follow other users on your pod, and you can also follow people who are users on other pods. When someone you follow on another pod posts an update, here’s what happens:

1. The update goes into the author’s pod’s database.

2. Your pod is notified over the API.

3. The update is saved in your pod’s database.

4. You look at your activity feed and see that post mixed in with posts from the other people you follow.

Comments work the same way. On any single post, some comments might be from people on the same pod as the post’s author, and some might be from people on other pods. Everyone who has permission to see the post sees all the comments, just as you would expect if everyone were on a single logical server.

Who cares?

There are technical and legal advantages to this architecture. The main technical advantage is fault tolerance.

Here is a very important fault tolerant system that every office should have.

If any one of the pods goes down, it doesn’t bring the others down. The system survives, and even expects, network partitioning. There are some interesting political implications to that — for example, if you’re in a country that shuts down outgoing internet to prevent access to Facebook and Twitter, your pod running locally still connects you to other people within your country, even though nothing outside is accessible.

The main legal advantage is server independence. Each pod is a legally separate entity, governed by the laws of wherever it’s set up. Each pod also sets their own terms of service. On most of them, you can post content without giving up your rights to it, unlike on Facebook. Diaspora is free software both in the “gratis” and the “libre” sense of the term, and most of the people who run pods care deeply about that sort of thing.

So that’s the architecture of the system. Let’s look at the architecture within a single pod.

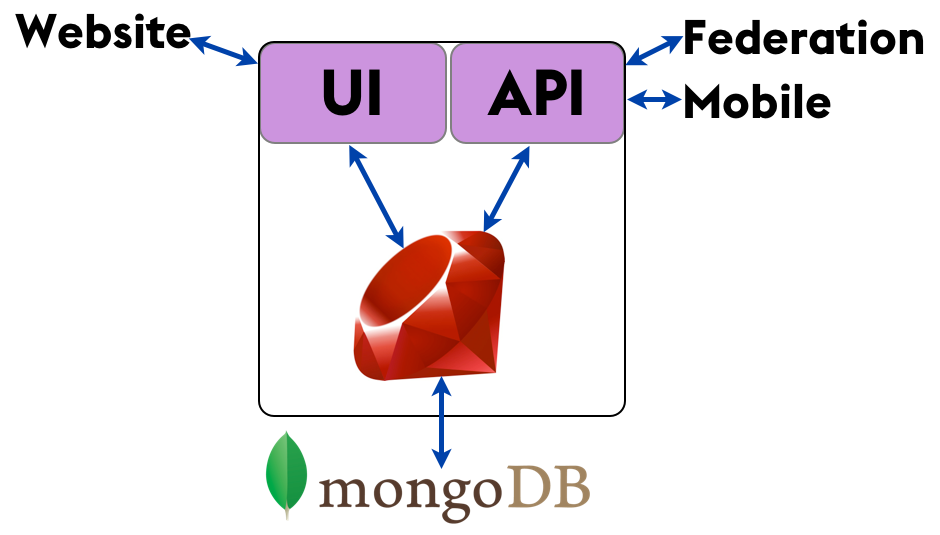

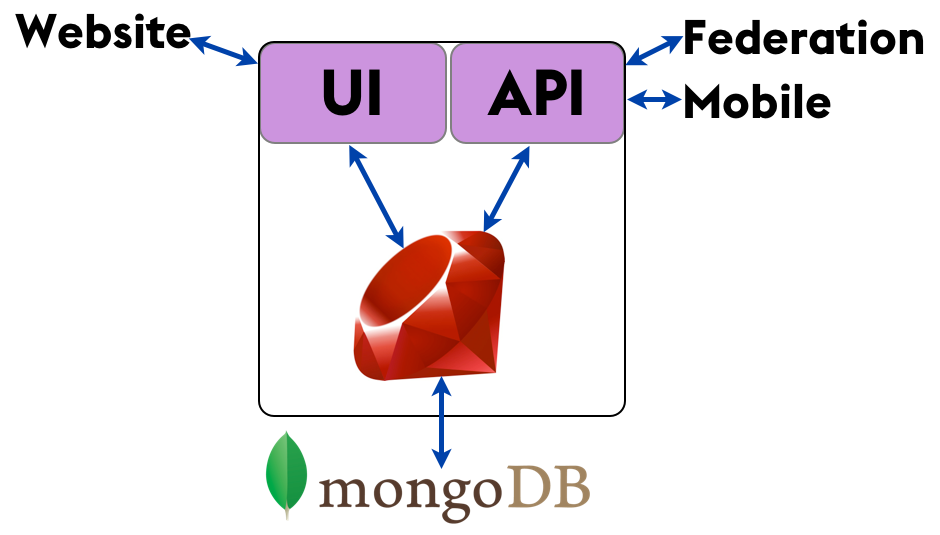

It’s a Rails app.

Each pod is a Ruby on Rails web application backed by a database, originally MongoDB. In some ways the codebase is a ‘typical’ Rails app — it has both a visual and programmatic UI, some Ruby code, and a database. But in other ways it is anything but typical.

The internal structure of one Diaspora pod

The visual UI is of course how website users interact with Diaspora. The API is used by various Diaspora mobile clients — that part’s pretty typical — but it’s also used for “federation,” which is the technical name for inter-pod communication. (I asked where the Romulans’ access point was once, and got a bunch of blank looks. Sigh.) So the distributed nature of the system adds layers to the codebase that aren’t present in a typical app.

And of course, MongoDB is an atypical choice for data storage. The vast majority of Rails applications are backed by PostgreSQL or (less often these days) MySQL.

So that’s the code. Let’s consider what kind of data we’re storing.

I Do Not Think That Word Means What You Think That Means

“Social data” is information about our network of friends, their friends, and their activity. Conceptually, we do think about it as a network — an undirected graph in which we are in the center, and our friends radiate out around us.

Photos all from rubyfriends.com. Thanks Matt Rogers, Steve Klabnik, Nell Shamrell, Katrina Owen, Sam Livingston-Grey, Josh Susser, Akshay Khole, Pradyumna Dandwate, and Hephzibah Watharkar for contributing to #rubyfriends!

When we store social data, we’re storing that graph topology, as well as the activity that moves along those edges.

For quite a few years now, the received wisdom has been that social data is not relational, and that if you store it in a relational database, you’re doing it wrong.

But what are the alternatives? Some folks say graph databases are more natural, but I’m not going to cover those here, since graph databases are too niche to be put into production. Other folks say that document databases are perfect for social data, and those are mainstream enough to actually be used. So let’s look at why people think social data fits more naturally in MongoDB than in PostgreSQL.

How MongoDB Stores Data

MongoDB is a document-oriented database. Instead of storing your data in tables made out of individual rows, like a relational database does, it stores your data in collections made out of individual documents. In MongoDB, a document is a big JSON blob with no particular format or schema.

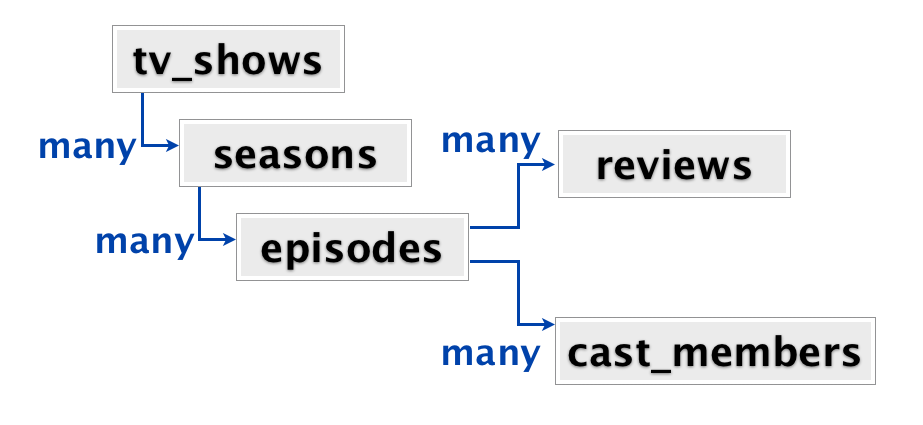

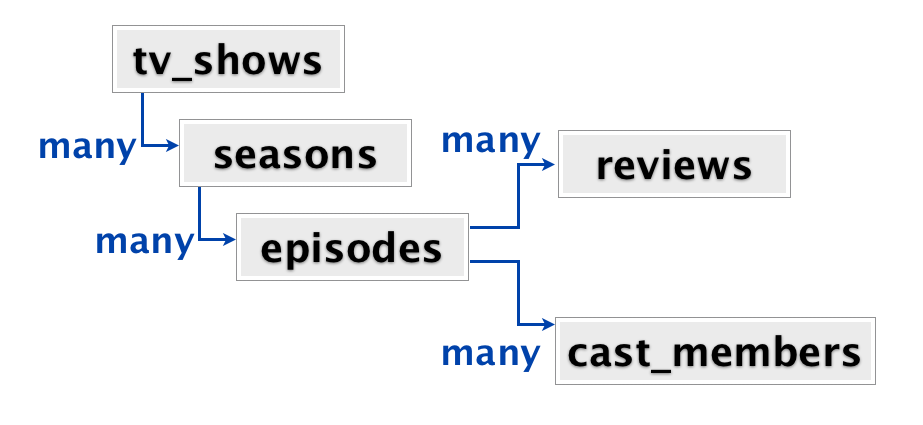

Let’s say you have a set of relationships like this that you need to model. This is quite similar to a project that come through Pivotal that used MongoDB, and was the best use case I’ve ever seen for a document database.

At the root, we have a set of TV shows. Each show has many seasons, each season has many episodes, and each episode has many reviews and many cast members. When users come into this site, typically they go directly to the page for a particular TV show. On that page they see all the seasons and all the episodes and all the reviews and all the cast members from that show, all on one page. So from the application perspective, when the user visits a page, we want to retrieve all of the information connected to that TV show.

There are a number of ways you could model this data. In a typical relational store, each of these boxes would be a table. You’d have a tv_shows table, a seasons table with a foreign key into tv_shows, an episodes table with a foreign key into seasons, and reviews and cast_members tables with foreign keys into episodes. So to get all the information for a TV show, you’re looking at a five-table join.

We could also model this data as a set of nested hashes. The set of information about a particular TV show is one big nested key/value data structure. Inside a TV show, there’s an array of seasons, each of which is also a hash. Within each season, an array of episodes, each of which is a hash, and so on. This is how MongoDB models the data. Each TV show is a document that contains all the information we need for one show.

Here’s an example document for one TV show, Babylon 5.

It’s got some title metadata, and then it’s got an array of seasons. Each season is itself a hash with metadata and an array of episodes. In turn, each episode has some metadata and arrays for both reviews and cast members.

It’s basically a huge fractal data structure.

Sets of sets of sets of sets. Tasty fractals.

All of the data we need for a TV show is under one document, so it’s very fast to retrieve all this information at once, even if the document is very large. There’s a TV show here in the US called “General Hospital” that has aired over 12,000 episodes over the course of 50+ seasons. On my laptop, PostgreSQL takes about a minute to get denormalized data for 12,000 episodes, while retrieval of the equivalent document by ID in MongoDB takes a fraction of a second.

So in many ways, this application presented the ideal use case for a document store.

Ok. But what about social data?

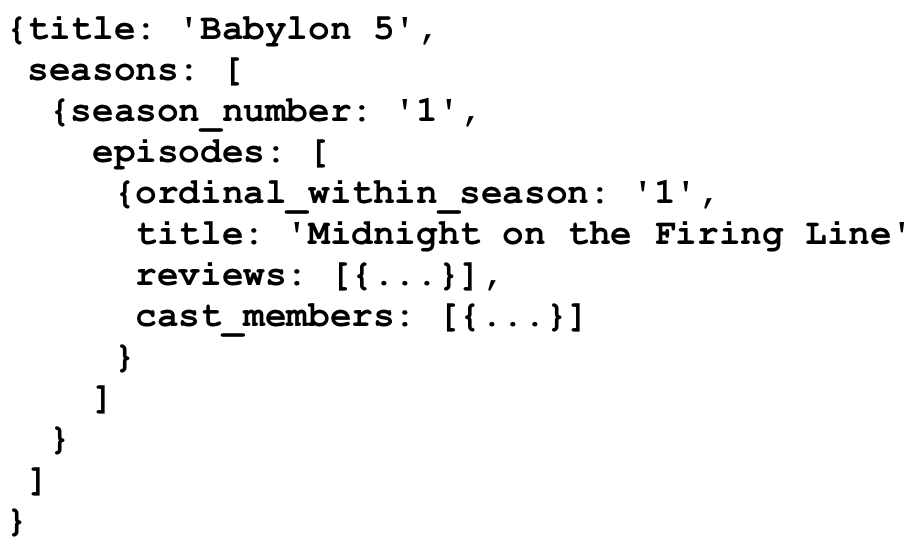

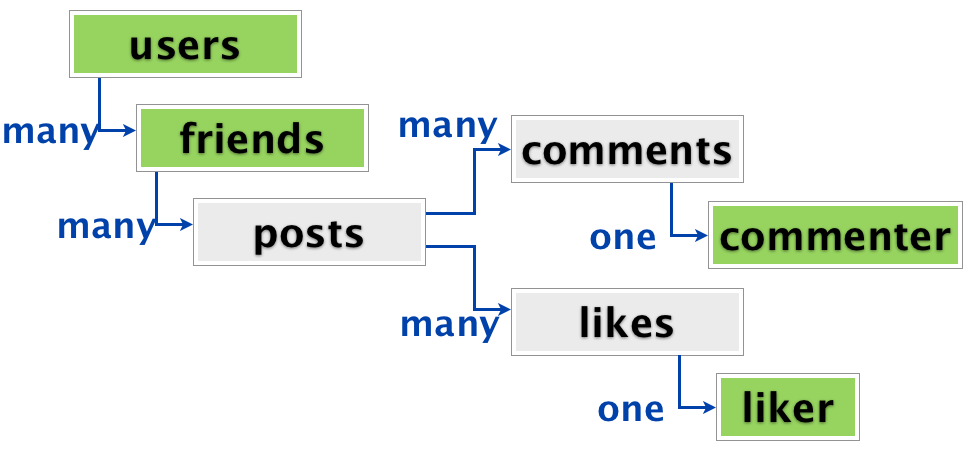

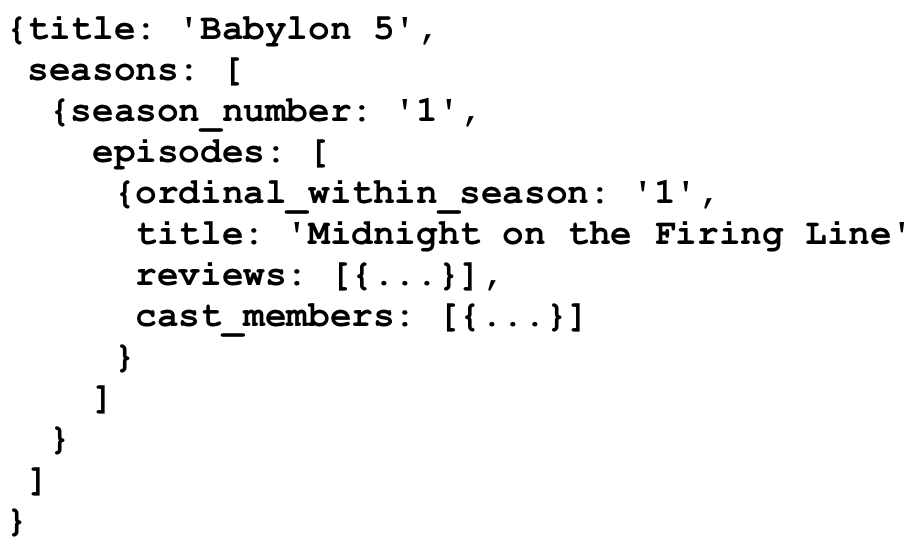

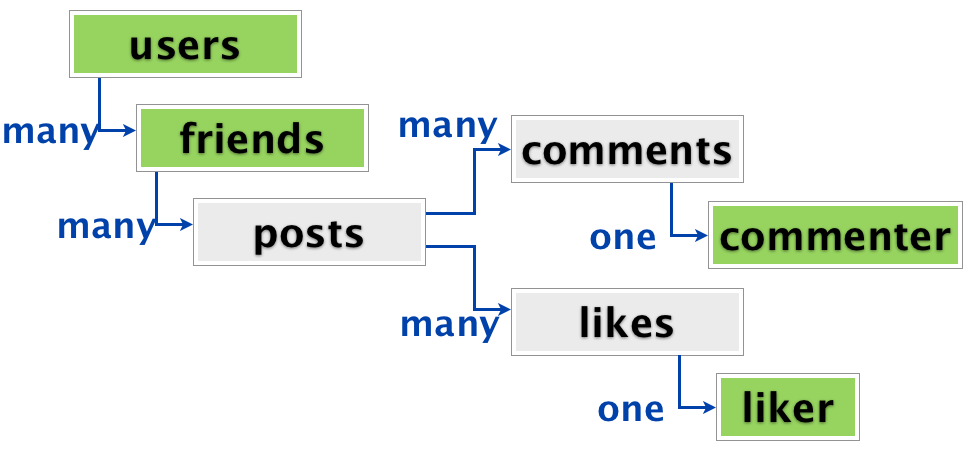

Right. When you come to a social networking site, there’s only one important part of the page: your activity stream. The activity stream query gets all of the posts from the people you follow, ordered by most recent. Each of those posts have nested information within them, such as photos, likes, reshares, and comments.

The nested structure of activity stream data looks very similar to what we were looking at with the TV shows.

Users have friends, friends have posts, posts have comments and likes, each comment has one commenter and each like has one liker. Relationship-wise, it’s not a whole lot more complicated than TV shows. And just like with TV shows, we want to pull all this data at once, right after the user logs in. Furthermore, in a relational store, with the data fully normalized, it would be a seven-table join to get everything out.

Seven-table joins. Ugh. Suddenly storing each user’s activity stream as one big denormalized nested data structure, rather than doing that join every time, seems pretty attractive.

In 2010, when the Diaspora team was making this decision, Etsy’s articles about using document stores were quite influential, although they’ve since publicly moved away from MongoDB for data storage. Likewise, at the time, Facebook’s Cassandra was also stirring up a lot of conversation about leaving relational databases. Diaspora chose MongoDB for their social data in this zeitgeist. It was not an unreasonable choice at the time, given the information they had.

What could possibly go wrong?

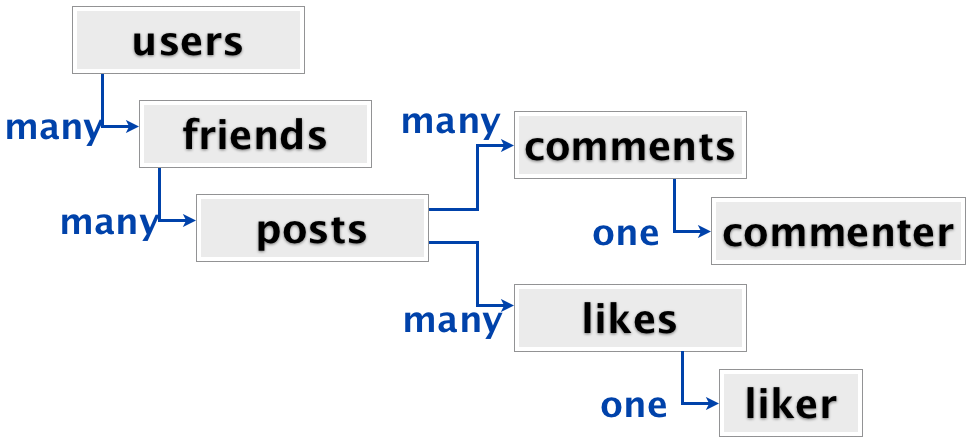

There is a really important difference between Diaspora’s social data and the Mongo-ideal TV show data that no one noticed at first.

With TV shows, each box in the relationship diagram is a different type. TV shows are different from seasons are different from episodes are different from reviews are different from cast members. None of them is even a sub-type of another type.

But with social data, some of the boxes in the relationship diagram are the same type. In fact, all of these green boxes are the same type — they are all Diaspora users.

A user has friends, and each friend may themselves be a user. Or, they may not, because it’s a distributed system. (That’s a whole layer of complexity that I’m just skipping for today.) In the same way, commenters and likers may also be users.

This type duplication makes it way harder to denormalize an activity stream into a single document. That’s because in different places in your document, you may be referring to the same concept — in this case, the same user. The user who liked that post in your activity stream may also be the user who commented on a different post.

Duplicate data Duplicate data

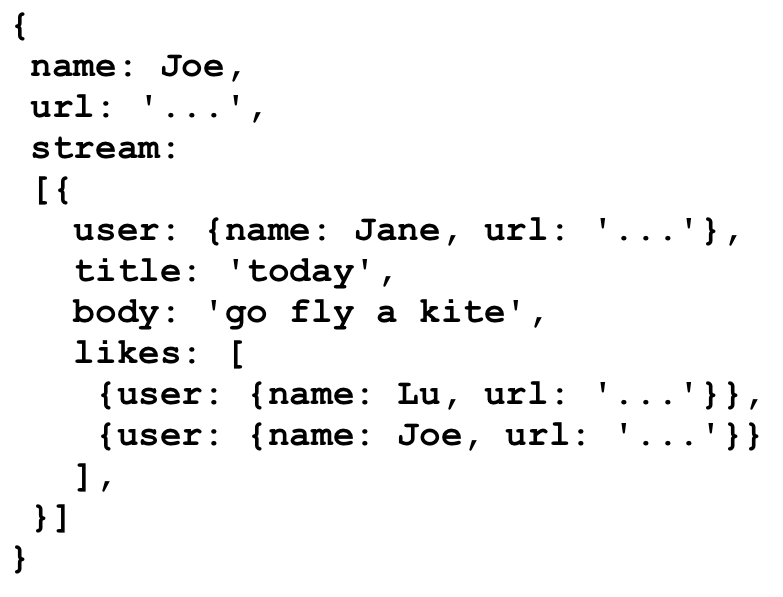

We can represent this in MongoDB in a couple of different ways. Duplication is any easy option. All the information for that friend is copied and saved to the like on the first post, and then a separate copy is saved to the comment on the second post. The advantage is that all the data is present everywhere you need it, and you can still pull the whole activity stream back as a single document.

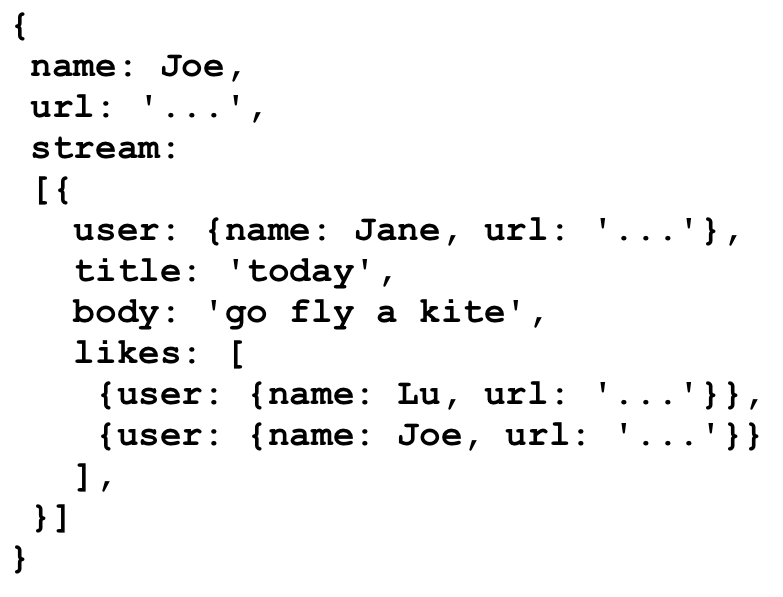

Here’s what this kind of fully denormalized stream document looks like.

Here we have copies of user data inlined. This is Joe’s stream, and it has a copy of his user data, including his name and URL, at the top level. His stream, just underneath, contains Jane’s post. Joe has liked Jane’s post, so under likes for Jane’s post, we have a separate copy of Joe’s data.

You can see why this is attractive: all the data you need is already located where you need it.

You can also see why this is dangerous. Updating a user’s data means walking through all the activity streams that they appear in to change the data in all those different places. This is very error-prone, and often leads to inconsistent data and mysterious errors, particularly when dealing with deletions.

Is there no hope?

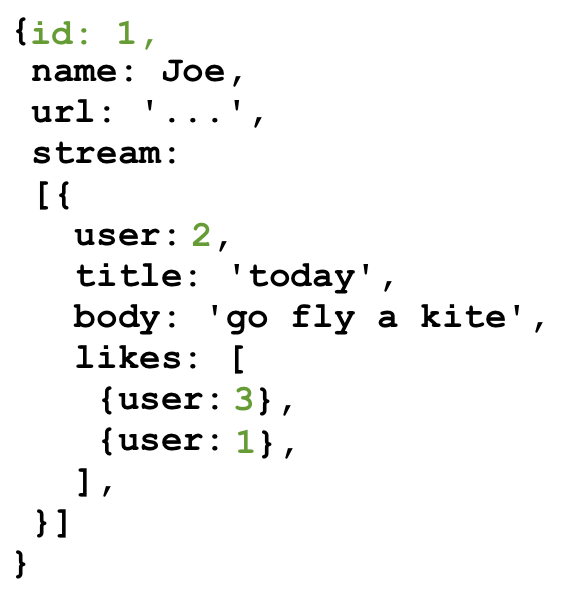

There is another approach you can take to this problem in MongoDB, which will more familiar if you have a relational background. Instead of duplicating user data, you can store references to users in the activity stream documents.

With this approach, instead of inlining this user data wherever you need it, you give each user an ID. Once users have IDs, we store the user’s ID every place that we were previously inlining data. New IDs are in green below.

MongoDB actually uses BSON IDs, which are strings sort of like GUIDs, but to make these samples easier to read I’m just using integers.

This eliminates our duplication problem. When user data changes, there’s only one document that gets rewritten. However, we’ve created a new problem for ourselves. Because we’ve moved some data out of the activity streams, we can no longer construct an activity stream from a single document. This is less efficient and more complex. Constructing an activity stream now requires us to 1) retrieve the stream document, and then 2) retrieve all the user documents to fill in names and avatars.

What’s missing from MongoDB is a SQL-style join operation, which is the ability to write one query that mashes together the activity stream and all the users that the stream references. Because MongoDB doesn’t have this ability, you end up manually doing that mashup in your application code, instead.

Simple Denormalized Data

Let’s return to TV shows for a second. The set of relationships for a TV show don’t have a lot of complexity. Because all the boxes in the relationship diagram are different entities, the entire query can be denormalized into one document with no duplication and no references. In this document database, there are no links between documents. It requires no joins.

On a social network, however, nothing is that self-contained. Any time you see something that looks like a name or a picture, you expect to be able to click on it and go see that user, their profile, and their posts. A TV show application doesn’t work that way. If you’re on season 1 episode 1 of Babylon 5, you don’t expect to be able to click through to season 1 episode 1 of General Hospital.

Don’t. Link. The. Documents.

Once we started doing ugly MongoDB joins manually in the Diaspora code, we knew it was the first sign of trouble. It was a sign that our data was actually relational, that there was value to that structure, and that we were going against the basic concept of a document data store.

Whether you’re duplicating critical data (ugh), or using references and doing joins in your application code (double ugh), when you have links between documents, you’ve outgrown MongoDB. When the MongoDB folks say “documents,” in many ways, they mean things you can print out on a piece of paper and hold. A document may have internal structure — headings and subheadings and paragraphs and footers — but it doesn’t link to other documents. It’s a self-contained piece of semi-structured data.

If your data looks like that, you’ve got documents. Congratulations! It’s a good use case for Mongo. But if there’s value in the links between documents, then you don’t actually have documents. MongoDB is not the right solution for you. It’s certainly not the right solution for social data, where links between documents are actually the most critical data in the system.

So social data isn’t document-oriented. Does that mean it’s actually…relational?

That Word Again

When people say “social data isn’t relational,” that’s not actually what they mean. They mean one of these two things:

1. “Conceptually, social data is more of a graph than a set of tables.”

This is absolutely true. But there are actually very few concepts in the world that are naturally modeled as normalized tables. We use that structure because it’s efficient, because it avoids duplication, and because when it does get slow, we know how to fix it.

2. “It’s faster to get all the data from a social query when it’s denormalized into a single document.”

This is also absolutely true. When your social data is in a relational store, you need a many-table join to extract the activity stream for a particular user, and that gets slow as your tables get bigger. However, we have a well-understood solution to this problem. It’s called caching.

At the All Your Base Conf in Oxford earlier this year, where I gave the talk version of this post, Neha Narula had a great talk about caching that I recommend you watch once it’s posted. In any case, caching in front of a normalized data store is a complex but well-understood problem. I’ve seen projects cache denormalized activity stream data into a document database like MongoDB, which makes retrieving that data much faster. The only problem they have then is cache invalidation.

“There are only two hard problems in computer science: cache invalidation and naming things.”

Phil Karlton

It turns out cache invalidation is actually pretty hard. Phil Karlton wrote most of SSL version 3, X11, and OpenGL, so he knows a thing or two about computer science.

Cache Invalidation As A Service

But what is cache invalidation, and why is it so hard?

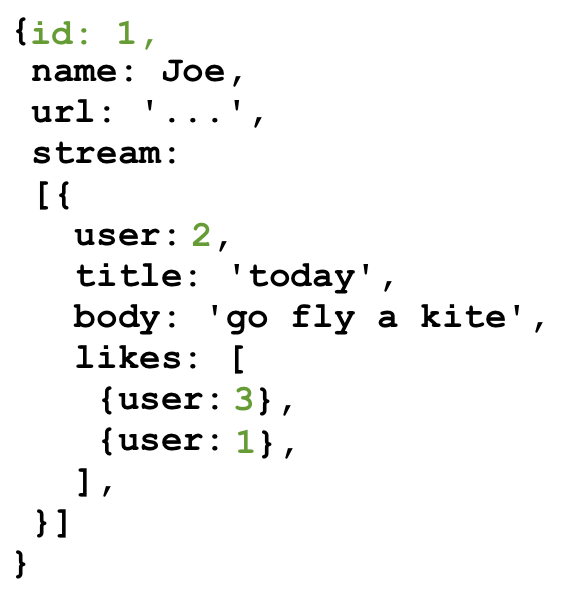

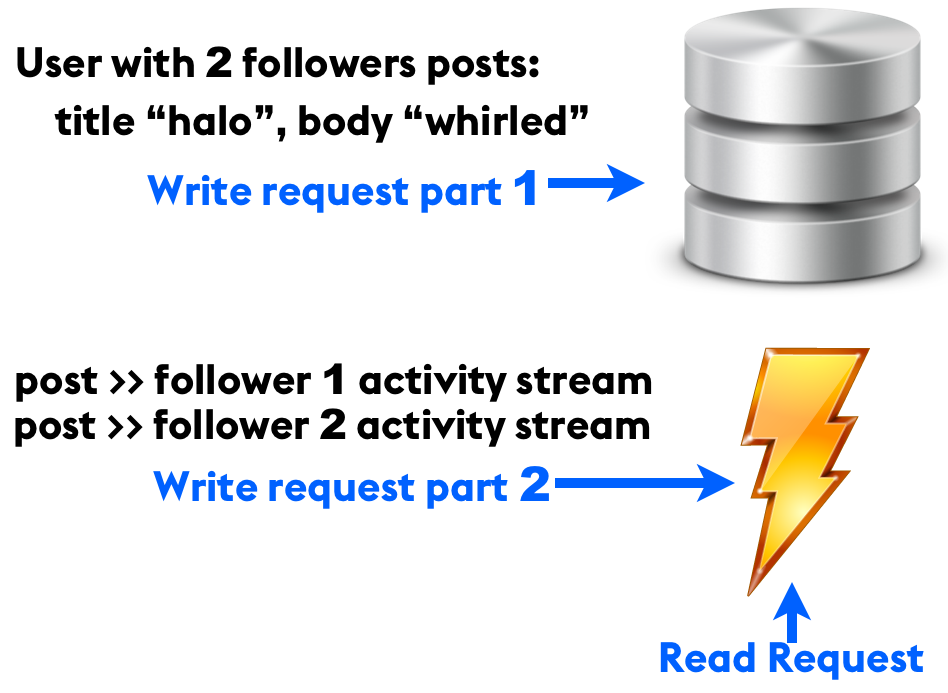

Cache invalidation is just knowing when a piece of your cached data is out of date, and needs to be updated or replaced. Here’s a typical example that I see every day in web applications. We have a backing store, typically PostgreSQL or MySQL, and then in front of that we have a caching layer, typically Memcached or Redis. Requests to read a user’s activity stream go to the cache rather than the database directly, which makes them very fast.

Typical cache and backing store setup

Application writes are more complicated. Let’s say a user with two followers writes a new post. The first thing that happens (part 1) is that the post data is copied into the backing store. Once that completes, a background job (part 2) appends that post to the cached activity stream of both of the users who follow the author.

This pattern is quite common. Twitter holds recently-active users’ activity streams in an in-memory cache, which they append to when someone they follow posts something. Even smaller applications that employ some kind of activity stream will typically end up here (see: seven-table join).

Back to our example. When the author changes an existing post, the update process is essentially the same as for a create, except instead of appending to the cache, it updates an item that’s already there.

What happens if that step 2 background job fails partway through? Machines get rebooted, network cables get unplugged, applications restart. Instability is the only constant in our line of work. When that happens, you’ll end up with invalid data in your cache. Some copies of the post will have the old title, and some copies will have the new title. That’s a hard problem, but with a cache, there’s always the nuclear option.

Always an option >_<

You can always delete the entire activity stream record out of your cache and regenerate it from your consistent backing store. It may be slow, but at least it’s possible.

What if there is no backing store? What if you skip step 1? What if the cache is all you have?

When MongoDB is all you have, it’s a cache with no backing store behind it. It will become inconsistent. Not eventually consistent — just plain, flat-out inconsistent, for all time. At that point, you have no options. Not even a nuclear one. You have no way to regenerate the data in a consistent state.

When Diaspora decided to store social data in MongoDB, we were conflating a database with a cache. Databases and caches are very different things. They have very different ideas about permanence, transience, duplication, references, data integrity, and speed.

The Conversion

Once we figured out that we had accidentally chosen a cache for our database, what did we do about it?

Well, that’s the million dollar question. But I’ve already answered the billion-dollar question. In this post I’ve talked about how we used MongoDB vs. how it was designed to be used. I’ve talked about it as though all that information were obvious, and the Diaspora team just failed to research adequately before choosing.

But this stuff wasn’t obvious at all. The MongoDB docs tell you what it’s good at, without emphasizing what it’s not good at. That’s natural. All projects do that. But as a result, it took us about six months, a lot of user complaints, and a lot of investigation to figure out that we were using MongoDB the wrong way.

There was nothing to do but take the data out of MongoDB and move it to a relational store, dealing as best we could with the inconsistent data we uncovered along the way. The data conversion itself — export from MongoDB, import to MySQL — was straightforward. For the mechanical details, you can see my slides from All Your Base Conf 2013.

The Damage

We had eight months of production data, which turned into about 1.2 million rows in MySQL. We spent four pair-weeks developing the code for the conversion, and when we pulled the trigger, the main site had about two hours of downtime. That was more than acceptable for a project that was in pre-alpha. We could have reduced that downtime more, but we had budgeted for eight hours of downtime, so two actually seemed fantastic.

NOT BAD

Epilogue

Remember that TV show application? It was the perfect use case for MongoDB. Each show was one document, perfectly self-contained. No references to anything, no duplication, and no way for the data to become inconsistent.

About three months into development, it was still humming along nicely on MongoDB. One Monday, at the weekly planning meeting, the client told us about a new feature that one of their investors wanted: when they were looking at the actors in an episode of a show, they wanted to be able to click on an actor’s name and see that person’s entire television career. They wanted a chronological listing of all of the episodes of all the different shows that actor had ever been in.

We stored each show as a document in MongoDB containing all of its nested information, including cast members. If the same actor appeared in two different episodes, even of the same show, their information was stored in both places. We had no way to tell, aside from comparing the names, whether they were the same person. So to implement this feature, we needed to search through every document to find and de-duplicate instances of the actor that the user clicked on. Ugh. At a minimum, we needed to de-dup them once, and then maintain an external index of actor information, which would have the same invalidation issues as any other cache.

You See Where This Is Going

The client expected this feature to be trivial. If the data had been in a relational store, it would have been. As it was, we first tried to convince the PM they didn’t need it. After that failed, we offered some cheaper alternatives, such as linking to an IMDB search for the actor’s name. The company made money from advertising, though, so they wanted users to stay on their site rather than going off to IMDB.

This feature request eventually prompted the project’s conversion to PostgreSQL. After a lot more conversation with the client, we realized that the business saw lots of value in linking TV shows together. They envisioned seeing other shows a particular director had been involved with, and episodes of other shows that were released the same week this one was, among other things.

This was ultimately a communication problem rather than a technical problem. If these conversations had happened sooner, if we had taken the time to really understand how the client saw the data and what they wanted to do with it, we probably would have done the conversion earlier, when there was less data, and it was easier.

Always Be Learning

I learned something from that experience: MongoDB’s ideal use case is even narrower than our television data. The only thing it’s good at is storing arbitrary pieces of JSON. “Arbitrary,” in this context, means that you don’t care at all what’s inside that JSON. You don’t even look. There is no schema, not even an implicit schema, as there was in our TV show data. Each document is just a blob whose interior you make absolutely no assumptions about.

At RubyConf this weekend, I ran into Conrad Irwin, who suggested this use case. He’s used MongoDB to store arbitrary bits of JSON that come from customers through an API. That’s reasonable. The CAP theorem doesn’t matter when your data is meaningless. But in interesting applications, your data isn’t meaningless.

I’ve heard many people talk about dropping MongoDB in to their web application as a replacement for MySQL or PostgreSQL. There are no circumstances under which that is a good idea. Schema flexibility sounds like a great idea, but the only time it’s actually useful is when the structure of your data has no value. If you have an implicit schema — meaning, if there are things you are expecting in that JSON — then MongoDB is the wrong choice. I suggest taking a look at PostgreSQL’s hstore (now apparently faster than MongoDB anyway), and learning how to make schema changes. They really aren’t that hard, even in large tables.

Find The Value

When you’re picking a data store, the most important thing to understand is where in your data — and where in its connections — the business value lies. If you don’t know yet, which is perfectly reasonable, then choose something that won’t paint you into a corner. Pushing arbitrary JSON into your database sounds flexible, but true flexibility is easily adding the features your business needs.

Make the valuable things easy.

The End.

Thanks for reading! Let me sum up how I feel about comments on this post: